A Novel Object Classification Technique

Project Github | Project Writeup | Project partner attribution: Sander Miller

Overview

For the final project of my Data Structures and Algorithms class, my project partner and I challenged ourselves to create a new object classification algorithm that would expand our knowledge in graph theory, computer vision, and search algorithms. In doing so, we:

- Performed a literature search in the intersection between graph theory and object classification.

- Devised and implemented a novel object classification algorithm that combines different computer vision techniques (like Hough transforms) and utilizes simulated annealing search.

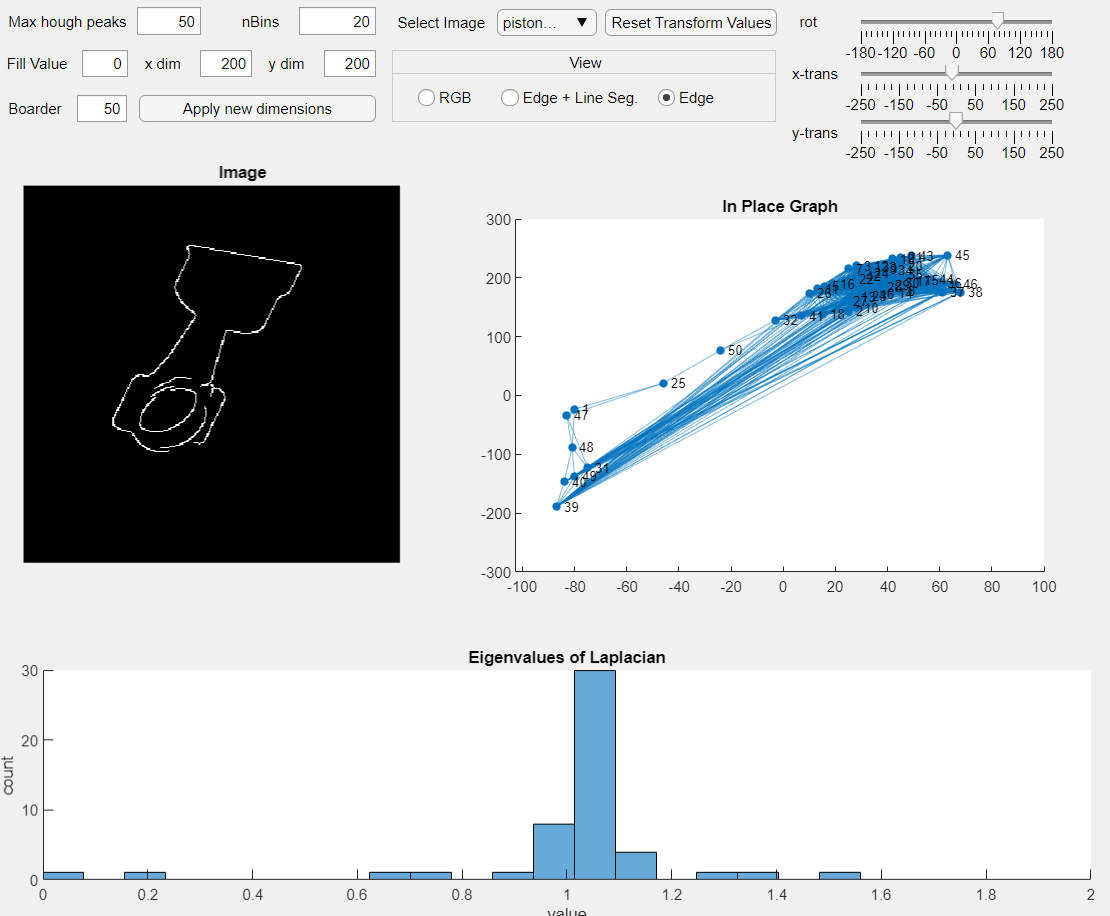

- Created a script for dataset generation, a MATLAB GUI for live visualization into the pre-processing stages of our algorithm, and a script to generate confusion matrices that characterize the performance of our algorithm.

Motivation and Process

The problem of matching a 3D model to its corresponding instance in the real world is a well explored problem. Often, it is framed as camera pose identification with respect to a marker-less object, where the object has a known geometry. However, to determine which 3D model to look for in a scene without user input requires either an advanced machine learning algorithm or a fairly exhaustive template search.

In exploration of this problem of identifying an instance of an object in an image, we consider the narrower but related problem of classifying edge-detected images with their identical but rotationally and translationally transformed counterparts. Our process is compartmentalized into two main parts: creating an embedding of the training images and comparing those embeddings to the test images at runtime.

My Contribution

I contributed in all aspects of this project, but took the greatest ownership in our algorithm’s utilization of graph theory and simulated annealing search, as well as led development of the interactive GUI.

Utilization of Graph Theory

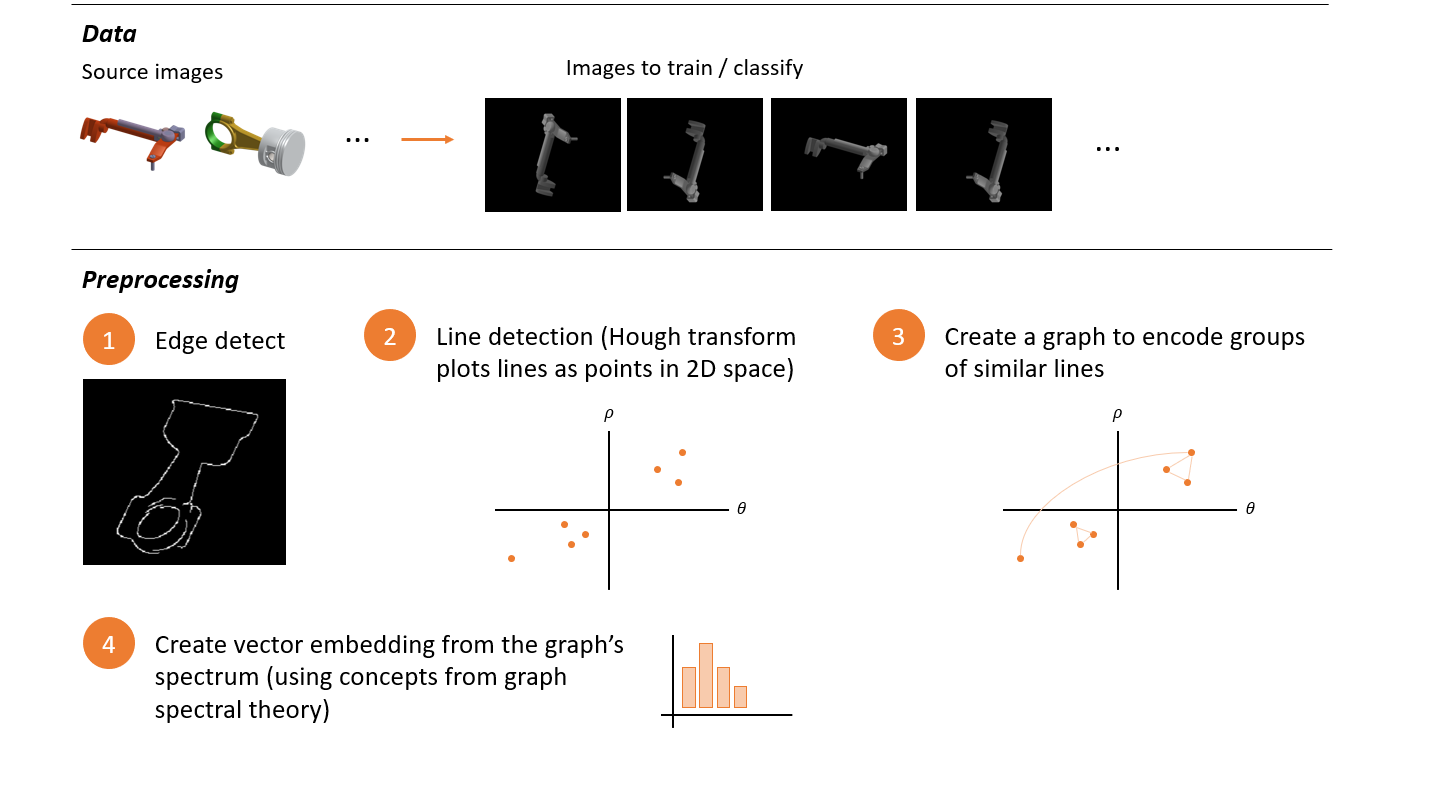

To create a vector embedding of an image, we utilized the 4-step process described below:

The motivation for this process is to create an embedding that stores information about the structure of the image by encoding the relationship between lines present in the image. To do this, we converted the peaks (salient points) from the images’ Hough transforms into graphs. Then, to compare the similarity of graphs, we computed the graphs’ spectra.

After exploring multiple different methods (such as determing graph is omorphism or using an approach from information networks predicated on the hub and authority relationships), we landed on using graph spectra to extract information about the topology of the graph and the Wasserstein metric to compare the graph spectra. I played a key role in performing the literature search on this topic and identifying the relevant takeaways from graph spectral theory to inform the implementation in code.

Interactive GUI

To test our design decisions and refine parameters for each step in the preprocessing pipeline, I created the interactive application that enables real-time visualization of each of the four steps shown in the above diagram. By creating this app, we could validate our dataset generation techniques and see in real-time how our graph embeddings responded to transformations of the input images.

Simulated Annealing

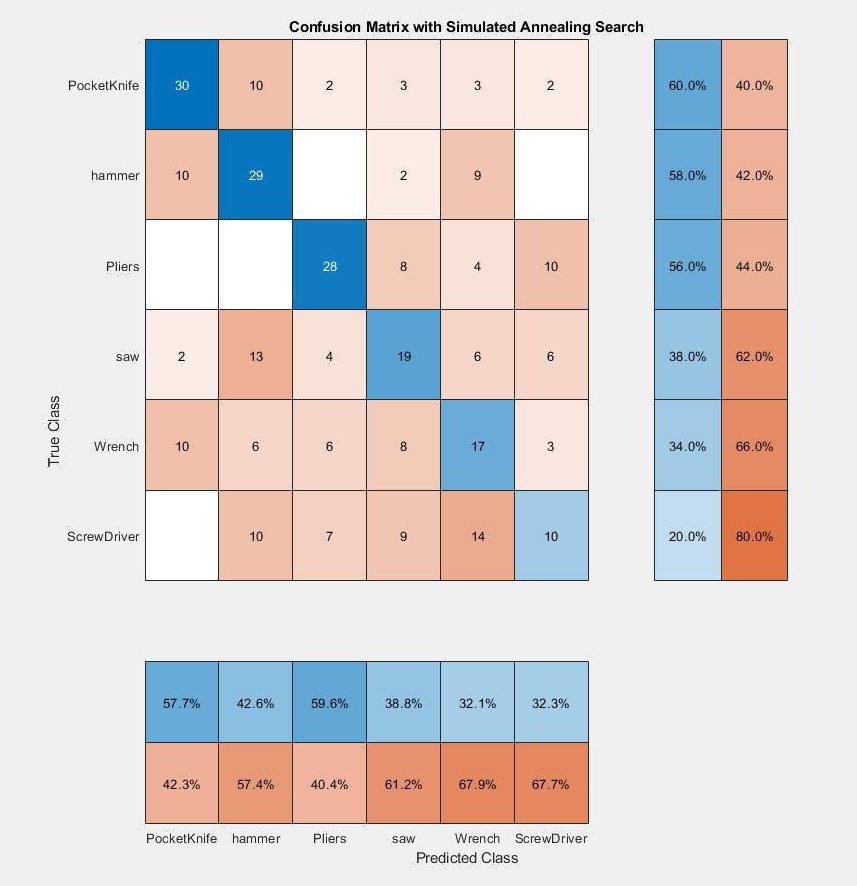

Comparing the embeddings entailed computing the Wasserstein metric - an expensive computation. Although we were able to characterize our algorithm using linear search, I also implemented a simulated annealing search algorithm - a type of search algorithm that explores more options than those that strictly represent improvement. By setting an upper bound on the number of iterations of the algorithm, the simulated annealing had a constant time complexity (albiet with a high coefficient).

Consequently, the challenge was to create a heuristic that would explore a fraction of the total search space while converge on the correct solution. For the algorithm’s heuristic, I implemented a part stochastic, part deterministic heuristic that would enable sufficient exploration of the search space and convergence on the correct solution.

Results and Future Work

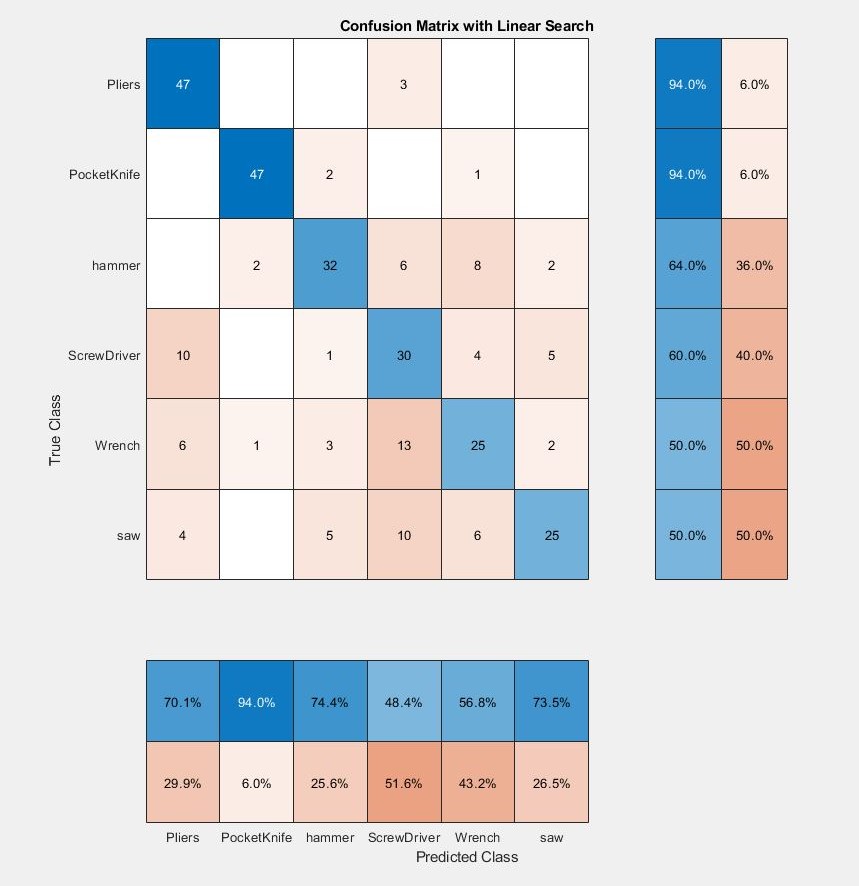

With a data set of eight images, we achieved an accuracy that simultaneously suggests that our graph embedding works and that there is room for improvement. Two points of further improvement include:

- Improving the invariance of the embeddings to rotational and translational transformations.

- Validating whether the approach used to generate embeddings works well with larger data sets.

Below are the confusion matrices that we generated for linear and simulated annealing search respectively:

Project Write-up

The algorithm my project partner and I devised along with our literature references are described in detail in our Project Writeup.

Abstract

We propose an algorithm to quickly and accurately classify edge-detected images of isolated objects. This is motivated by the general problem of matching objects observed by cameras to corresponding 3D models’ wire-frame renderings. Our algorithm uses a low-dimensional embedding of the edge-detected image that is a histogram-representation of the spectra of the graph created using the relationship of peaks in the image’s Hough transformation. We narrow the problem scope to matching edge-detected images to rotated and translated instances of themselves, and see success rates of up to 90% in classification. We employ both linear search and simulated annealing optimization methods and compare their results.