Followbot

Project Github | Project Website | Vision System Demonstration | Project partner attribution: Kristin Aoki, Meagan Rittmanic, Elias Gabriel, Olivia Seitelman

Overview

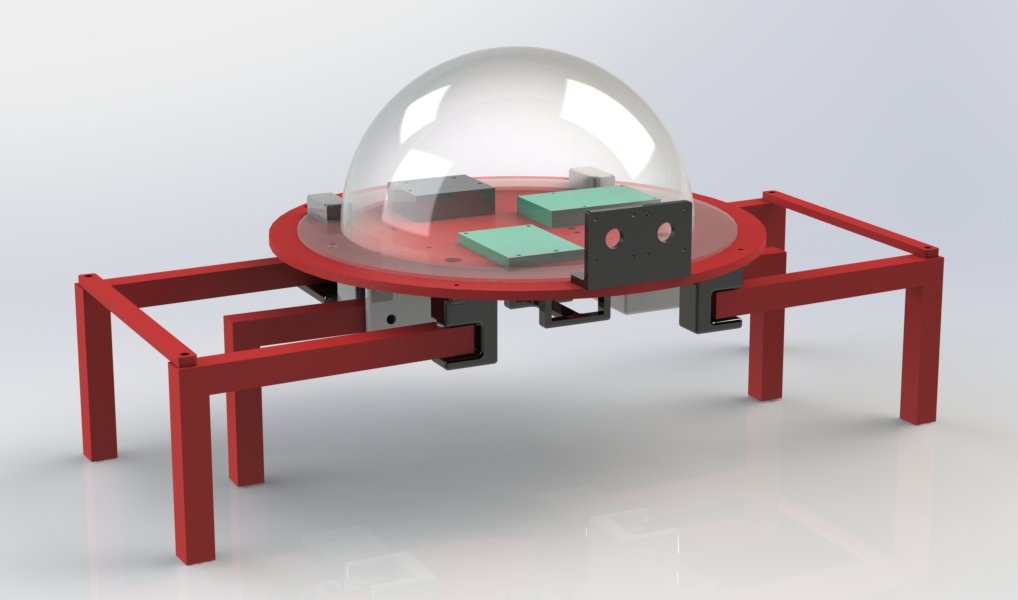

“Followbot” is a robot my project team built in our Principles of Engineering class. Its objective is to follow a person in its field of view while navigating around obstacles. The robot is completely autonomous, propelled by a hexapod design, and performs all computation onboard and untethered using a Raspberry Pi and an Arduino Uno.

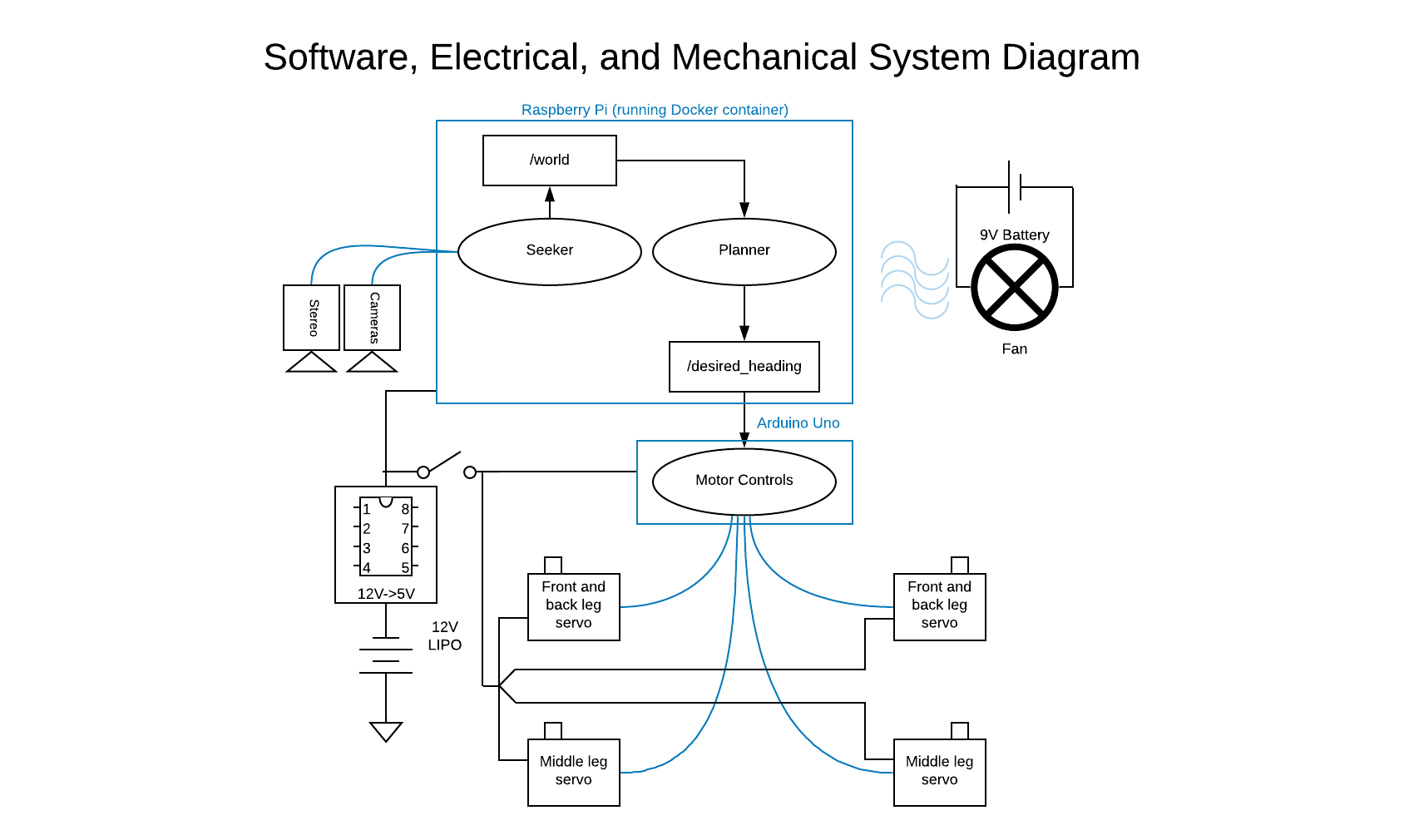

This project was an exercise in sub-system integration and incorporating computer vision capabilities into a robust software system; below is a diagram I made illustrating the connections between electrical, mechanical, and software systems:

You can read about each of the subsystems here and follow our progress from start to finish here.

Vision System

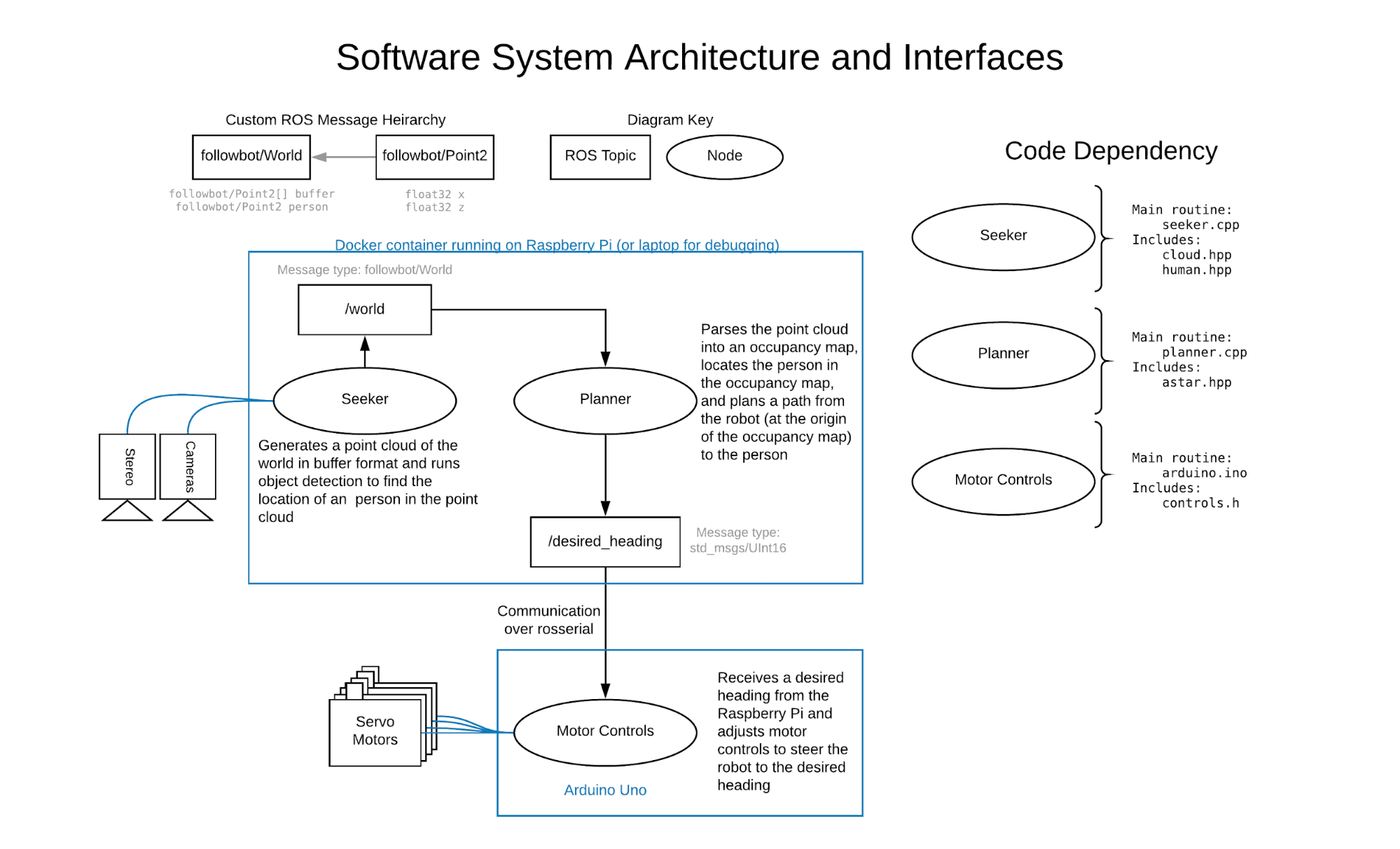

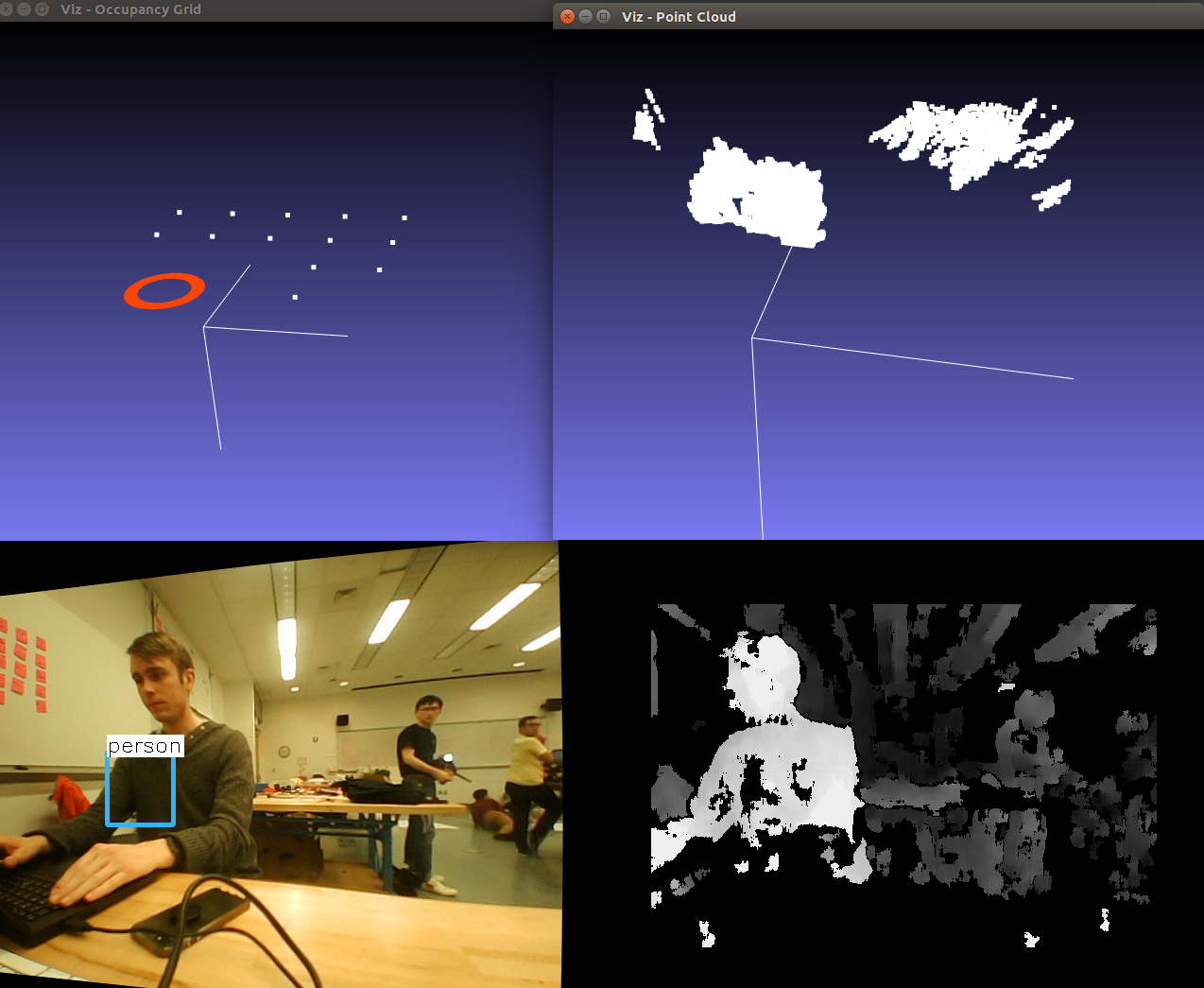

My primary contribution to this project was in the software system: I wrote most of the computer vision code (using OpenCV in C++) and played a key role in using the ROS (Robot Operating System) framework to compartmentalize and parallelize key processes. At a high level, the robot’s stereo cameras create a depth map of the world by comparing their frames, using the intrinsic and extrinsic parameters collected during calibration. A neural network then processes one of the frames and identifies the location of a person in it. That location in the camera frame is then mapped to a location in the real world using the point cloud created from the depth map.
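To make the last step concrete, here is a minimal sketch of how a pixel and its stereo disparity can be turned into a 3D point using the standard pinhole model for a rectified stereo pair. It is not the project's actual code: `StereoCalibration`, `Point3`, and `backProject` are hypothetical names, and a single shared focal length is assumed for simplicity.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical calibration values for a rectified stereo pair.
struct StereoCalibration {
    double focal_px;    // focal length, in pixels
    double cx, cy;      // principal point, in pixels
    double baseline_m;  // distance between the two camera centers, in meters
};

struct Point3 { double x, y, z; };

// Back-project pixel (u, v) with the given disparity (in pixels) into the
// camera frame: x to the right, y down, z forward, all in meters.
// Returns false when the disparity is not positive (no valid stereo match).
bool backProject(const StereoCalibration& cal, double u, double v,
                 double disparity_px, Point3& out) {
    assert(cal.focal_px > 0.0 && cal.baseline_m > 0.0);
    if (disparity_px <= 0.0) return false;
    const double z = cal.focal_px * cal.baseline_m / disparity_px;  // depth
    out.x = (u - cal.cx) * z / cal.focal_px;
    out.y = (v - cal.cy) * z / cal.focal_px;
    out.z = z;
    return true;
}
```

Under these assumptions, calling `backProject` at the center pixel of the detected person's bounding box would give an estimate of where that person stands relative to the camera.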

The point cloud is then parsed into an occupancy grid, which an A* search algorithm I wrote uses to find a path from the robot to the person; this path informs the immediate change in heading that the robot should make. The video below visualizes this functionality (although the A*-found path is not shown) in four panels:

- In the top left, a 2D occupancy grid of the camera’s surrounding environment with the red circle indicating the presence of a person,

- In the top right, a 3D point cloud of the camera’s environment,

- In the bottom left, a (rectified) view from one of the cameras annotated with object detection, and

- In the bottom right, a depth map from the stereo cameras.
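The occupancy grid and A* search described above can be sketched roughly as follows. This is an illustration under stated assumptions rather than the code that runs on the robot: the grid layout (robot in the middle of row 0, rows increasing with forward distance), the 4-connected moves with unit cost, the Manhattan heuristic, and the names `OccupancyGrid`, `markPoints`, and `findPath` are all hypothetical. A real pipeline would likely also filter points by height and require several points per cell before marking it occupied.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdlib>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

struct Cell { int row, col; };

// Row-major occupancy grid: true marks a cell that contains an obstacle.
struct OccupancyGrid {
    int rows, cols;
    std::vector<bool> occupied;
    OccupancyGrid(int r, int c)
        : rows(r), cols(c), occupied(static_cast<std::size_t>(r) * c, false) {
        assert(r > 0 && c > 0);
    }
    bool inBounds(Cell c) const {
        return c.row >= 0 && c.row < rows && c.col >= 0 && c.col < cols;
    }
    int index(Cell c) const { return c.row * cols + c.col; }
    bool blocked(Cell c) const { return occupied[index(c)]; }
    void mark(Cell c) { if (inBounds(c)) occupied[index(c)] = true; }
};

// Bin ground-plane points (first = x to the right, second = z forward, in
// meters) into the grid. Points that fall outside the grid are ignored.
void markPoints(OccupancyGrid& grid,
                const std::vector<std::pair<double, double>>& xz,
                double cell_m) {
    for (const auto& p : xz) {
        const int col = static_cast<int>(std::floor(p.first / cell_m)) + grid.cols / 2;
        const int row = static_cast<int>(std::floor(p.second / cell_m));
        grid.mark({row, col});
    }
}

// A* over 4-connected cells with unit step cost and a Manhattan heuristic.
// Returns the cells from start to goal inclusive, or an empty vector if the
// goal cannot be reached.
std::vector<Cell> findPath(const OccupancyGrid& grid, Cell start, Cell goal) {
    if (!grid.inBounds(start) || !grid.inBounds(goal)) return {};
    if (grid.blocked(start) || grid.blocked(goal)) return {};
    auto heuristic = [&](Cell c) {
        return std::abs(c.row - goal.row) + std::abs(c.col - goal.col);
    };

    const int n = grid.rows * grid.cols;
    std::vector<int> cost(n, -1);    // best known cost from start; -1 = unseen
    std::vector<int> parent(n, -1);  // predecessor index, for path rebuilding
    using Entry = std::pair<int, int>;  // (cost + heuristic, cell index)
    std::priority_queue<Entry, std::vector<Entry>, std::greater<Entry>> open;

    cost[grid.index(start)] = 0;
    open.push({heuristic(start), grid.index(start)});
    const int dr[4] = {1, -1, 0, 0};
    const int dc[4] = {0, 0, 1, -1};

    while (!open.empty()) {
        const Entry top = open.top();
        open.pop();
        const int idx = top.second;
        const Cell cur{idx / grid.cols, idx % grid.cols};
        if (top.first > cost[idx] + heuristic(cur)) continue;  // stale entry
        if (idx == grid.index(goal)) break;  // goal popped: its cost is final
        for (int k = 0; k < 4; ++k) {
            const Cell next{cur.row + dr[k], cur.col + dc[k]};
            if (!grid.inBounds(next) || grid.blocked(next)) continue;
            const int nidx = grid.index(next);
            const int newCost = cost[idx] + 1;
            if (cost[nidx] == -1 || newCost < cost[nidx]) {
                cost[nidx] = newCost;
                parent[nidx] = idx;
                open.push({newCost + heuristic(next), nidx});
            }
        }
    }

    std::vector<Cell> path;
    if (cost[grid.index(goal)] == -1) return path;  // goal never reached
    for (int idx = grid.index(goal); idx != -1; idx = parent[idx])
        path.push_back({idx / grid.cols, idx % grid.cols});
    std::reverse(path.begin(), path.end());
    return path;
}
```

In this sketch the start cell would be the robot's own cell and the goal would be the cell containing the detected person; an empty result means the person is currently unreachable on the grid.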

Software Architecture Overview

These capabilities were split into two nodes:

- Point cloud creation and identification of the person in the point cloud

- Point cloud parsing and path finding, including calculating the heading to send to the Arduino Uno that controls the motors.
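As an illustration of the heading calculation in the second node, one simple approach is to aim at a waypoint a few cells along the found path. The sketch below assumes a grid in which the robot faces the +row direction and +col is to its right; `headingFromPath` and its `lookahead` parameter are hypothetical, and how the resulting value is encoded for the Arduino Uno is not covered here.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Cell { int row, col; };

// Heading change, in radians, toward the waypoint `lookahead` cells along the
// path (clamped to the last cell). Assumed convention: 0 means straight ahead
// and positive values mean a turn to the right.
double headingFromPath(const std::vector<Cell>& path, std::size_t lookahead) {
    assert(lookahead >= 1);
    if (path.size() < 2) return 0.0;  // already at the goal, or no path
    const std::size_t target = std::min(lookahead, path.size() - 1);
    const double forward = path[target].row - path[0].row;
    const double right = path[target].col - path[0].col;
    return std::atan2(right, forward);
}
```

Looking a few cells ahead instead of only at the next cell smooths out the staircase shape of a grid path, at the cost of cutting corners slightly; the right lookahead distance would depend on the grid resolution and the robot's turning behavior.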

A complete description of the software system can be found here. There’s a lot more to read about, including our use of Docker containers to keep our development environments consistent and optimized for their respective hardware. The containers also made our development pipeline seamless: different builds of the code are automatically triggered depending on whether the code is compiled on our laptops or on the onboard Raspberry Pi. Besides easing development, our optimizations cut the update cycle time from over 4 seconds to 300 milliseconds.